Research

You can find my publications on my Google Scholar profile.

Overview

I am interested in developing AI agents (and/or robots) that can collaborate coherently with humans. Currently, I am working on two core problems:

- Persistent and interpretable policy updates based on human feedback (how AI agents can adapt to humans)

- Human behavior modeling (how AI agents can understand humans)

I believe these two problems complement each other (representing, respectively, the explicit and implicit communication from the human to the agent) and are fundamental to human-AI and human-robot interaction. Many other open questions in algorithmic human-robot interaction can be reduced to them. For example:

- End-user programming: This is essentially humans using commands as feedback to update the agent's policy.

- Human-agent mutual adaptation: Joint adaptation is complicated because the agent has to learn the task, the human's policy, the human's current belief about the agent, and more. An easier alternative is to have the agent reveal its current policy, let the human decide how the work should be split, and assign the agent's role through policy adaptation.

- Objective alignment: As it is extremely challenging to fully specify the intrinsic objective function of the human in one go, it is easier to iteratively adapt the policy of the AI agent to align with the human's true objective.

- Safety: Persistence means mistakes are not repeated (safety in seen environments), and interpretability enables formal verification of the agent's policy (safety in unseen environments).

To solve the first problem, I aim to draw inspiration from human intelligence at the cognitive level (not the neuronal level!) to inform the design of AI agents. At the moment, I am particularly interested in using cognitive architectures together with large language models (LLMs).

To solve the second problem, I have accumulated experience with a range of learning backbones (e.g., multi-modal transformers and random forests) and feature engineering methods.

Projects

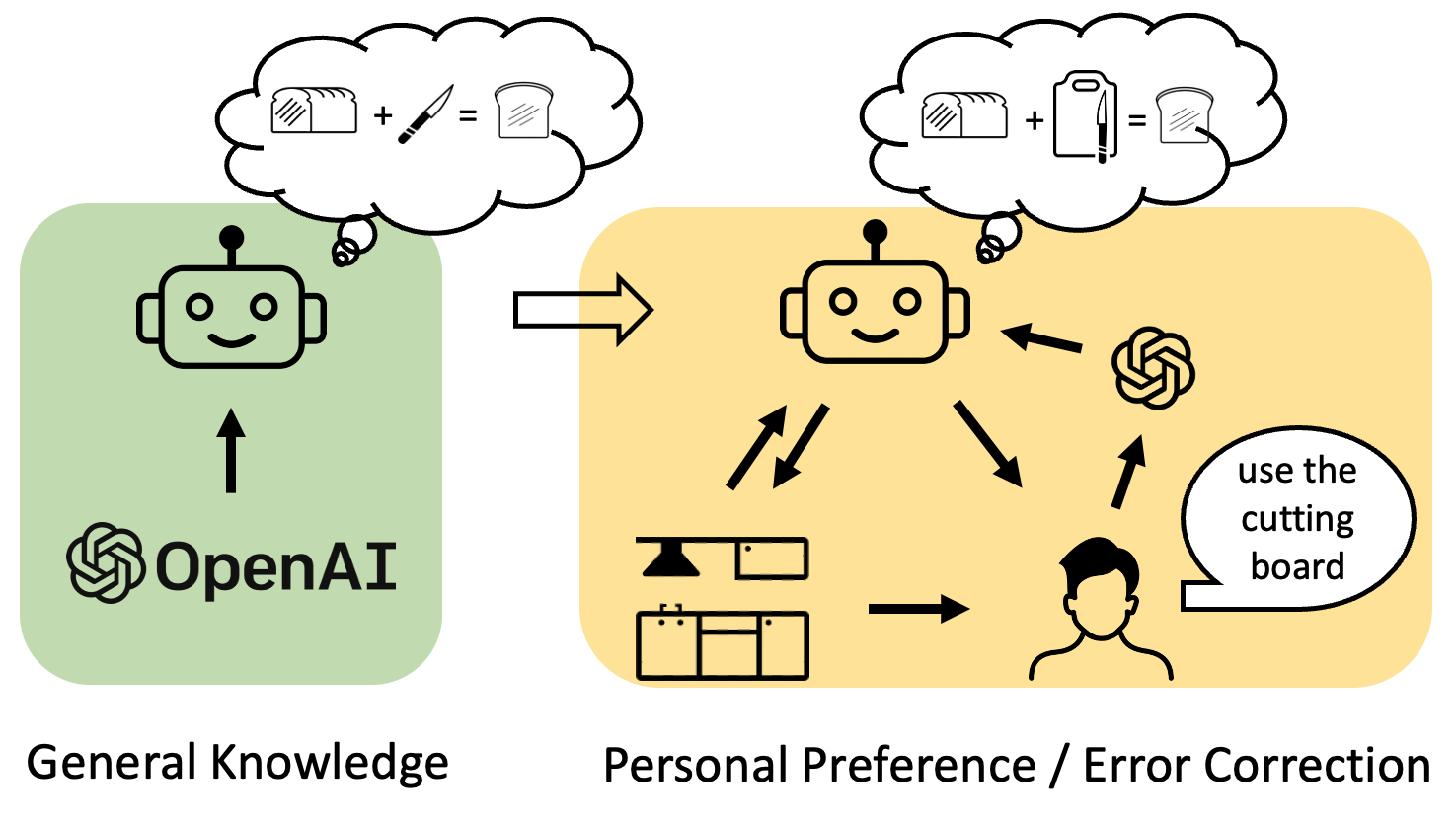

| [Policy Adaptation] [Honors Undergrad Thesis] Incorporating Instructive Feedback in Human-AI Collaboration [AAAI 2024] [AAAI 2024 appendix] [AAAI 2024 code] [poster] [3 Minute Thesis] | |

| Cognitive architectures support one-shot persistent updates, systematic generalization, and interpretability. However, most previous work relies on hand-coded production rules, which limits the applicability of cognitive architectures. In this work, we leveraged the code-generation capability of GPT-4 to automatically generate a cognitive agent. As a next step, we will exploit the production system of the cognitive architecture to let users update the agent's policy to suit their own preferences. |
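
To illustrate why a production system makes policy updates one-shot, persistent, and interpretable, here is a minimal Python sketch. It is not the GPT-4-generated agent from this project: the `Production`/`ProductionSystem` names, the dict-based working memory, priority-based conflict resolution, and the toy cooking state are all hypothetical. A single user instruction becomes one readable rule that changes behavior immediately and stays in effect for every later decision.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Working memory as a flat dict of facts (a simplifying assumption for this sketch).
State = Dict[str, object]


@dataclass
class Production:
    """A condition-action rule: IF condition(state) THEN propose action."""
    name: str
    condition: Callable[[State], bool]
    action: str
    priority: int = 0  # higher priority wins conflict resolution


@dataclass
class ProductionSystem:
    rules: List[Production] = field(default_factory=list)

    def add_rule(self, rule: Production) -> None:
        # One-shot, persistent update: the new rule applies to every later decision.
        self.rules.append(rule)

    def decide(self, state: State) -> Optional[str]:
        # Match phase: collect all rules whose condition holds in this state.
        matched = [r for r in self.rules if r.condition(state)]
        if not matched:
            return None
        # Conflict resolution: the highest-priority matching rule fires.
        return max(matched, key=lambda r: r.priority).action


# Hypothetical usage in a toy cooking task.
agent = ProductionSystem()
agent.add_rule(Production("chop", lambda s: s["onion"] == "whole", "chop onion"))
state = {"onion": "whole", "partner_at_board": True}
print(agent.decide(state))  # chop onion

# User instruction: "If I'm at the cutting board, fetch the plates instead."
agent.add_rule(Production("yield-board", lambda s: s["partner_at_board"], "fetch plates", priority=1))
print(agent.decide(state))  # fetch plates
```

Because the update is an explicit rule rather than a change to network weights, it can be inspected, edited, or removed by name.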

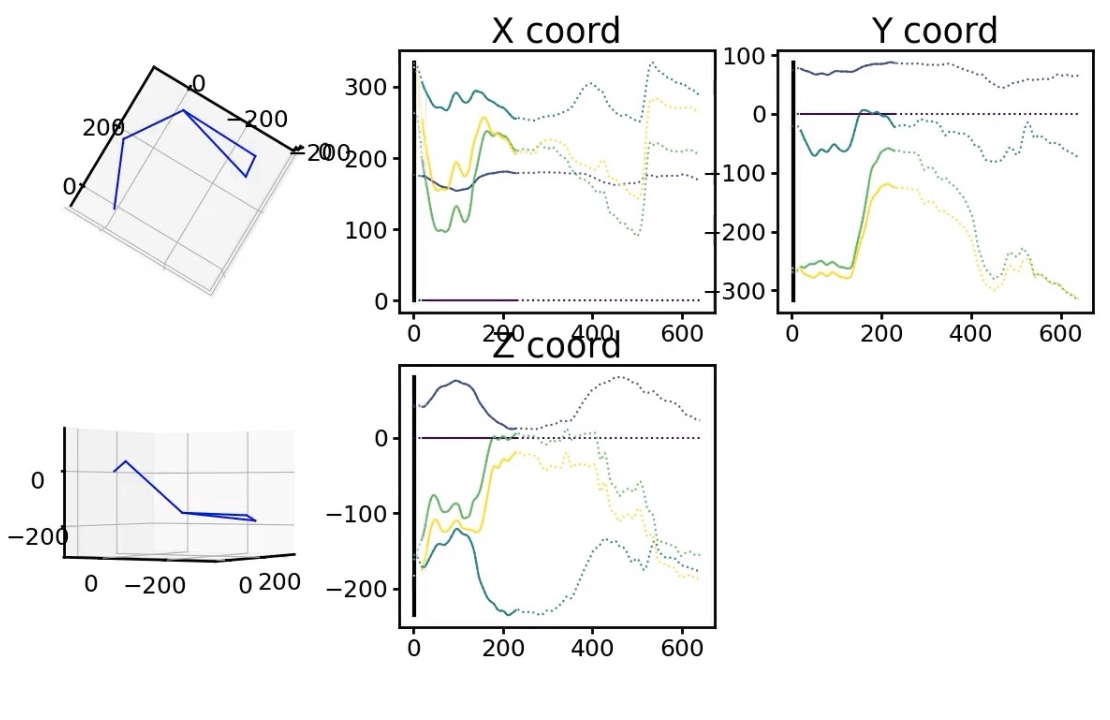

| [Human Modeling] Home-based Stroke Patients Functional Evaluation [slides] | |

| Many stroke-induced behaviors are local and action-agnostic. Healthy subjects do not exhibit stroke-induced behaviors, whereas parts of a stroke patient's motion may be indistinguishable from a healthy subject's. Based on these two insights, we developed a local feature descriptor using weakly labeled trajectories from stroke patients and healthy subjects. This provides a finer-grained functional evaluation: which specific motions are difficult for a stroke patient and which types of tasks they might have trouble performing. It also shows that some activities of daily living are more suitable for evaluation than others. We also built an LSTM model to regress the patient's Fugl-Meyer Assessment score, achieving 88% accuracy relative to assessments by trained physical therapists. |
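
The two insights can be read as a weak-supervision setup: every local window from a healthy subject is "normal", while only some windows from a stroke patient are abnormal. One simple way to exploit this, shown here as a Python sketch, is to score each patient window by its distance to the nearest healthy window. This nearest-neighbor scoring is a swapped-in illustration, not necessarily the descriptor used in the project; the speed/jerk features, window size, and stride are assumptions. The descriptor ignores the action label, matching the action-agnostic insight.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # e.g. a 3-D wrist position per frame (assumed)
Trajectory = List[Point]


def windows(traj: Trajectory, size: int, stride: int) -> List[Trajectory]:
    """Split a trajectory into overlapping local windows of `size` frames."""
    return [traj[i:i + size] for i in range(0, len(traj) - size + 1, stride)]


def descriptor(win: Trajectory) -> List[float]:
    """Hypothetical local descriptor: mean speed and mean change in speed (a jerkiness proxy).

    Requires at least 3 frames per window.
    """
    speed = [math.dist(a, b) for a, b in zip(win, win[1:])]
    accel = [abs(v2 - v1) for v1, v2 in zip(speed, speed[1:])]
    return [sum(speed) / len(speed), sum(accel) / len(accel)]


def abnormality_scores(patient: Trajectory, healthy: Sequence[Trajectory],
                       size: int = 5, stride: int = 2) -> List[float]:
    """Score each patient window by its distance to the nearest healthy window.

    Weak labels: every healthy window is treated as normal, so a patient window that
    resembles any healthy motion scores near 0, while stroke-specific motion scores high.
    """
    bank = [descriptor(w) for t in healthy for w in windows(t, size, stride)]
    return [min(math.dist(descriptor(w), h) for h in bank)
            for w in windows(patient, size, stride)]
```

High-scoring windows point to the specific motion segments a patient struggles with; aggregating scores per activity is one way to compare how informative different activities are.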

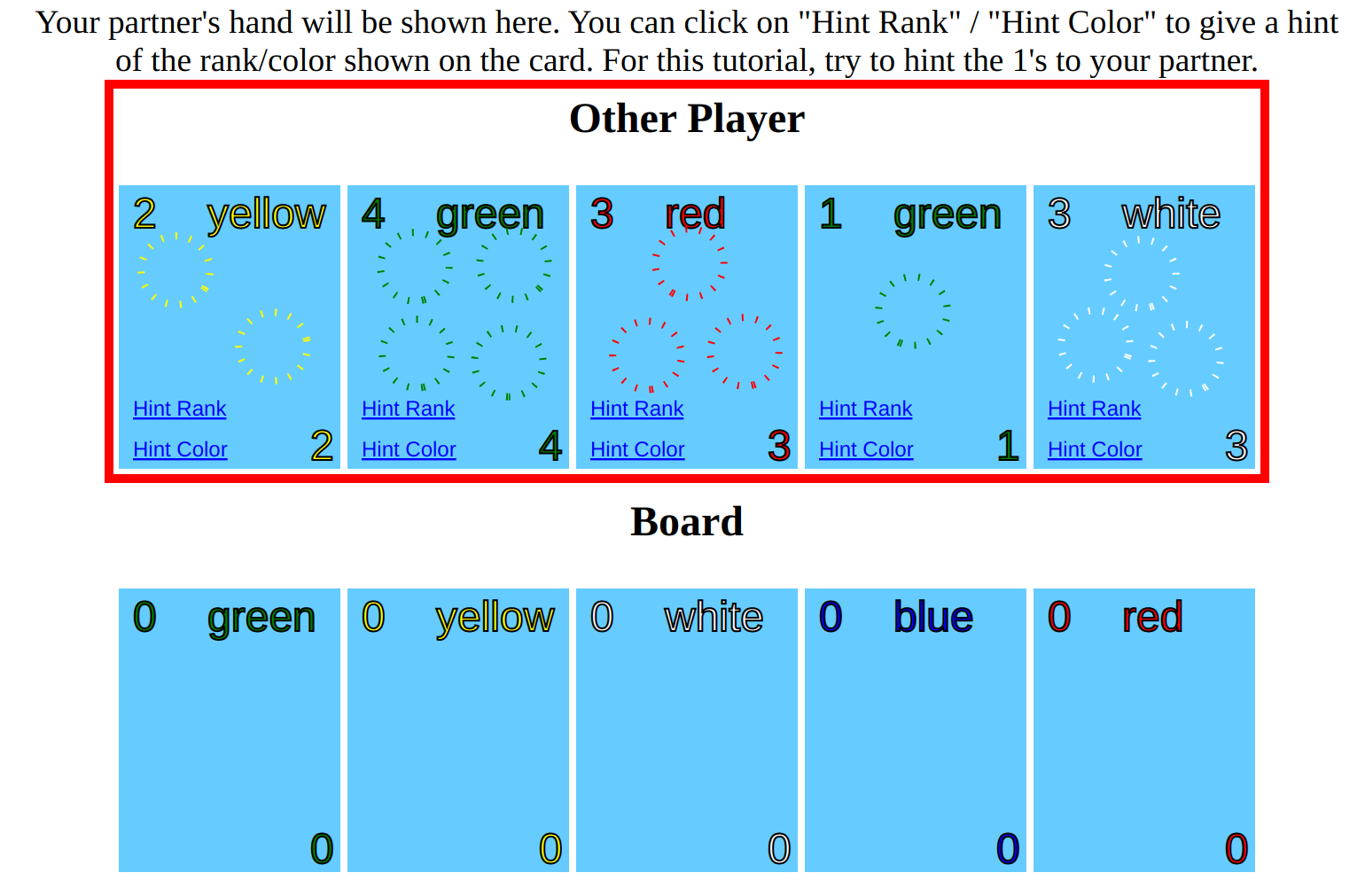

| [Policy Adaptation] Rapid Adaptation to Human Partners in Hanabi [code] | |

| There are multiple equilibria in the cooperative card game Hanabi, so it is important for the two players to commit to the same strategy or convention. We first built a library of potential human-like strategies using decision trees and reward-based backbones. We then used Bayesian inference to estimate the partner's strategy and match it with the most compatible strategy in the library. We showed that this approach can reliably estimate the partner's general strategy within a few turns of the game. The approach can also be applied to other tasks where the agent needs to implicitly estimate the conventions of its human partner. |
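
The inference step can be sketched as a sequential Bayesian update over a strategy library, followed by picking our strategy with the highest expected compatibility under the posterior. In this Python sketch, hint/play/discard are Hanabi's move types, but the strategy names, likelihoods, and compatibility scores are made up, and "expected compatibility" is an assumed reading of "most compatible". In the project, the likelihoods would come from the learned strategy models.

```python
from typing import Dict, Iterable

Dist = Dict[str, float]

# Hypothetical strategy library: P(observed move type | partner strategy).
LIKELIHOOD: Dict[str, Dist] = {
    "hint-to-play":     {"hint": 0.6, "play": 0.3, "discard": 0.1},
    "hint-to-save":     {"hint": 0.5, "play": 0.1, "discard": 0.4},
    "cautious-discard": {"hint": 0.2, "play": 0.1, "discard": 0.7},
}

# Hypothetical compatibility: expected team score when our strategy (outer key)
# is paired with the partner's strategy (inner key).
COMPATIBILITY: Dict[str, Dist] = {
    "hint-to-play":     {"hint-to-play": 20, "hint-to-save": 12, "cautious-discard": 8},
    "hint-to-save":     {"hint-to-play": 12, "hint-to-save": 20, "cautious-discard": 14},
    "cautious-discard": {"hint-to-play": 8, "hint-to-save": 14, "cautious-discard": 18},
}


def infer(moves: Iterable[str], prior: Dist) -> Dist:
    """Sequential Bayesian update: P(s | moves) is proportional to P(s) * prod_t P(move_t | s)."""
    post = dict(prior)
    for move in moves:
        post = {s: p * LIKELIHOOD[s][move] for s, p in post.items()}
        z = sum(post.values())
        post = {s: p / z for s, p in post.items()}
    return post


def best_response(post: Dist) -> str:
    """Pick our strategy with the highest expected compatibility under the posterior."""
    return max(COMPATIBILITY,
               key=lambda ours: sum(p * COMPATIBILITY[ours][s] for s, p in post.items()))


uniform = {s: 1 / len(LIKELIHOOD) for s in LIKELIHOOD}
post = infer(["discard", "discard", "discard"], uniform)
print(max(post, key=post.get))  # cautious-discard
print(best_response(post))      # cautious-discard
```

Since the posterior is updated after every move, the agent can switch its own convention mid-game as soon as the evidence favors a different partner strategy.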

| [Human Modeling] Action Anticipation in Ego-centric Kitchen Videos [report] | |

| Action anticipation aims to predict human behavior in the immediate future given past observations. However, mainstream encoder-decoder approaches perform poorly because of two main challenges: 1) how to align the action space with the observation space, and 2) how to represent actions at different levels of the hierarchy. We proposed explicitly encoding the pre- and post-conditions of actions, which helps constrain the space of potential future actions. This partially inspired my later work on cognitive agents. |
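
How pre- and post-conditions constrain the space of future actions can be sketched with a STRIPS-style symbolic state: observed actions roll the state forward through their post-conditions, and only actions whose pre-conditions hold remain as candidates. This Python sketch is an assumed representation, not the encoding from the report; the kitchen action vocabulary and the `make_action` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Sequence, Set


@dataclass(frozen=True)
class Action:
    name: str
    pre: FrozenSet[str]      # facts that must hold before the action (pre-conditions)
    add: FrozenSet[str]      # facts the action makes true (post-conditions)
    delete: FrozenSet[str]   # facts the action makes false


def make_action(name: str, pre: Iterable[str] = (), add: Iterable[str] = (),
                delete: Iterable[str] = ()) -> Action:
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


def apply(state: Set[str], action: Action) -> Set[str]:
    """Roll the symbolic state forward with an action's post-conditions."""
    return (state - action.delete) | action.add


def feasible_next(state: Set[str], actions: Sequence[Action]) -> List[str]:
    """Only actions whose pre-conditions hold remain as anticipation candidates."""
    return [a.name for a in actions if a.pre <= state]


# Hypothetical kitchen action vocabulary.
ACTIONS = [
    make_action("take onion", {"onion in fridge"}, {"holding onion"}, {"onion in fridge"}),
    make_action("put onion on board", {"holding onion"}, {"onion on board"}, {"holding onion"}),
    make_action("take knife", {"knife on counter"}, {"holding knife"}, {"knife on counter"}),
    make_action("cut onion", {"holding knife", "onion on board"}, {"onion cut"}),
    make_action("fry onion", {"onion cut", "pan on stove"}, {"onion fried"}),
]

state = {"onion in fridge", "knife on counter", "pan on stove"}
for observed in ["take onion", "put onion on board"]:  # past observations
    state = apply(state, next(a for a in ACTIONS if a.name == observed))
print(feasible_next(state, ACTIONS))  # ['take knife']
```

In a learned anticipation model, a filter like `feasible_next` could mask the decoder's output distribution so that probability mass only goes to actions consistent with the inferred state.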

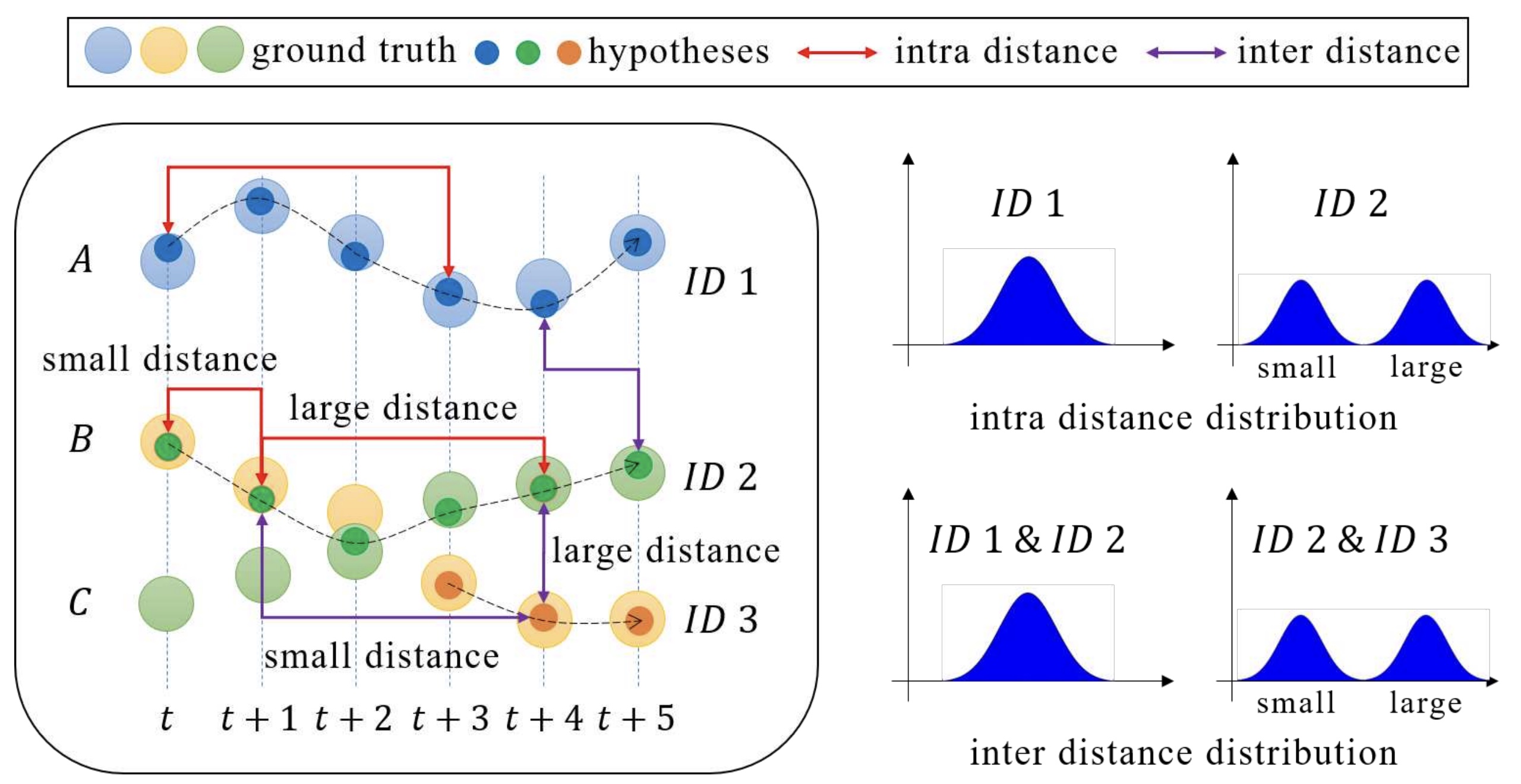

| [Human Modeling] Pedestrian Tracking Test Time Augmentation [CVPR 2020] [video] | |

| Visual consistency within a trajectory and between trajectories reflects the quality of a tracking algorithm's output. Based on this simple observation, we designed an evaluation metric for parameter optimization that requires no ground-truth annotations. This allows any tracking algorithm to be further fine-tuned to its specific environment after deployment. We showed a 5.8% performance increase with this test-time augmentation technique without modifying the tracking algorithm itself. This is useful when the conditions in the deployed environment (lighting, pedestrian walking speed, etc.) are very different from those in the training set. |
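
The idea of tuning a deployed tracker without ground truth can be sketched as a parameter search that maximizes a self-consistency score. This Python sketch is not the metric or tracker from the CVPR 2020 paper: per-detection appearance features, cosine similarity, the "within-track minus between-track" score, and the `greedy_tracker` stand-in (which ignores the one-detection-per-track-per-frame constraint) are all hypothetical. The tracker is treated as a black box called with different parameters, so it is never modified.

```python
import math
from itertools import combinations
from typing import Callable, Iterable, List, Sequence

Feature = Sequence[float]   # appearance embedding of one detection (assumed)
Track = List[Feature]       # features of one tracked identity, in time order


def cosine(a: Feature, b: Feature) -> float:
    # Assumes non-zero feature vectors.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mean(xs: Iterable[float]) -> float:
    xs = list(xs)
    return sum(xs) / len(xs) if xs else 0.0


def consistency(tracks: List[Track]) -> float:
    """Label-free quality score: high within-track similarity, low between-track similarity."""
    within = mean(cosine(a, b) for t in tracks for a, b in zip(t, t[1:]))
    centroids = [[sum(col) / len(t) for col in zip(*t)] for t in tracks]
    between = mean(cosine(a, b) for a, b in combinations(centroids, 2))
    return within - between


def tune(run_tracker: Callable[[float], List[Track]], grid: Sequence[float]) -> float:
    """Pick the tracker parameter whose output is most self-consistent (no ground truth)."""
    return max(grid, key=lambda p: consistency(run_tracker(p)))


def greedy_tracker(frames: List[List[Feature]], threshold: float) -> List[Track]:
    """Toy stand-in tracker: link each detection to the most similar track end, if similar enough."""
    tracks: List[Track] = []
    for detections in frames:
        for f in detections:
            best = max(tracks, key=lambda t: cosine(t[-1], f), default=None)
            if best is not None and cosine(best[-1], f) >= threshold:
                best.append(f)
            else:
                tracks.append([f])
    return tracks


# Two hypothetical pedestrians with distinct appearance, observed over three frames.
frames = [[[1.0, 0.1], [0.1, 1.0]], [[0.9, 0.2], [0.2, 0.9]], [[1.0, 0.0], [0.0, 1.0]]]
best = tune(lambda th: greedy_tracker(frames, th), [0.0, 0.5, 0.99])
print(best)  # 0.5
```

A too-low threshold merges both pedestrians into one track (low within-track similarity), and a too-high one fragments them (high between-track similarity); the consistency score penalizes both without any labels.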